This post is a little bit niche, given that it’s addressed to people who may have seen the DeepMind x UCL Lecture Series on Reinforcement Learning and were wondering how the answers to the end-of-lecture questions were worked out. It took me a fair while to figure them out myself; it’s been a long time since I’ve needed to use integral calculus. But it may be useful for someone, so here it is.

Introduction

There’s a great series of lectures on Reinforcement Learning available on YouTube, a collaboration between Google DeepMind and the UCL Centre for Artificial Intelligence. The first lecture is a broad overview of the topic, introducing the core concepts of Agents, States, Policy, Value Functions and so on. The second lecture picks up from this and starts going into the mathematics behind some of the action selection Policy algorithms. This lecture concludes with an exercise to demonstrate worked examples of these policies in action on a very simple problem.

Policy Example Question

The example question appears close to the end of the video (at timestamp 2:03:45). We are given two actions (a and b) so that at time step 1, action a is selected and we observe a reward of 0. At time step 2, action b is selected and we observe a reward of +1.

The question to answer is what is the probability that we will select action a under the different selection algorithms, i.e.

Greedy Policy

This selection policy is very simple. The action selected is the one that has the highest Action Value (Q) observed to date:

Where:

The function I indicates if the action has been chosen at the particular time (it’s an Indicator function) and the function R is the observed reward.

So for action a,

For action b,

Therefore, action a will not be selected by the Greedy Policy, .

-Greedy Policy

-Greedy Policy

The -Greedy Policy introduces a random element in to the selection to avoid getting stuck like the Greedy Policy.

With the probability , behave like the Greedy Policy. In this case, action b would be selected.

With probability , select an action at random. In this problem there are two actions so they have a equal probability of being select. So select either a with

or select b

.

So to work out what the probability of selecting action a is, we’ll combine the two probabilities:

Upper Confidence Bounds (UCB) Policy

UCB is an interesting policy that tries to balance the exploration/exploitation trade off via the hyperparamter C. If C is set to 0, then you have a greedy policy. As C increases, you are giving more opportunity to exploration, in other words, selecting alternative actions.

, where

Both and

have the same

for any value of C we select, since both actions have been selected once (the nt(x) part of the function counts the number of times the action x has been chosen). Therefore, in this particular problem, UCB behaves the same as the greedy policy.

As before, will be selected, so

Thompson Sampling

A probability matching policy selects an action based on the probability that it is the best action. Thompson Sampling does this by calculating a probability of the expected reward for each action, and then selecting the action that has the maximum.

This question posed a bit of difficulty. There is an error in the video presentation at time 1:47:50, which caused me a bit of confusion. At that point, the procedure for updating the posterior for the beta distribution Beta(xa,ya) is introduced, but it is incorrect. The posterior updates are swapped around. The correct update should be:

, when Rt = 1 (action chosen, reward observed)

, when Rt = 0 (action chosen, no reward observed)

Therfore, the values of and

for the prior actions selected are:

| Action a | Action b | |

The values of and

at time

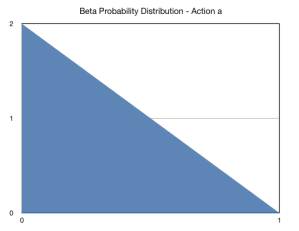

give the following Beta probability distributions for action a and action b.

For completion, the expected values of these distributions are:

For Action a, this is 1/3 and for Action b, this is 2/3.

Let’s look at the Probability Density Functions for each of the actions. The standard Beta Distribution is given by:

In particular, the beta function itself, “B” (the denominator of the fraction in that equation) is expressed by:

For action “a”, the value of the Beta function is:

For action “b”, the value of the Beta function is:

Now we can calculate the Probability Density Functions for both actions:

PDF for action a:

PDF for action b (using y instead of x as the random variable):

The joint PDF for the actions is given by:

Now that we have the joint probability for the actions, we can calculate the probability that the next action selected will be action “a” as follows:

So using Thompson Sampling, the probability of selecting action a is:

Note that in the video, the answer is given as 1/4. This is corrected later in the comments.

Conclusion

Hopefully, you found these answers and explanations useful. If so, please let me know in the comments below. Please also let me know if you find any errors and I’ll gladly correct them.